Artificial Intelligence

Related Terms

- autonomous creative intelligence

- autonomous generated intelligence

- machine intelligence (to de-emphasise the comparison to human intelligence)

- decision support systems

- Computing

Long-term impacts:

- science

- cooperation

- power

- epistemics

- values

Clarke, Sam, and Jess Whittlestone. “A Survey of the Potential Long-Term Impacts of AI: How AI Could Lead to Long-Term Changes in Science, Cooperation, Power, Epistemics and Values.” In Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, 192–202. AIES ’22. New York: Association for Computing Machinery, 2022. https://doi.org/10/grntdj.

A political and material perspective by a Microsoft researcher. Critical but does not account for nonhuman life.

Crawford, Kate. Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. New Haven: Yale University Press, 2021.

Overviews and Taxonomies

Methodological

- Symbolic/GOFAI (logic, rules, knowledge graphs, planning)

- Probabilistic (Bayesian nets, graphical models, decision theory)

- Connectionist (ANNs—including deep learning)

- Evolutionary & program synthesis (genetic programming, autoML)

- Hybrid/neurosymbolic (explicit reasoning over learned representations)

Helps avoid “deep learning = AI” reductionism and maps better onto design choices in complex projects.

Agent/Environment

Classify agents and task environments using PEAS (Performance, Environment, Actuators, Sensors) and agent types (reflex, model-based, goal-based, utility-based, learning).

Technique-agnostic; applies equally to robots, software agents, and human-machine collectives.

Russell, Stuart, and Peter Norvig. Artificial Intelligence: A Modern Approach. 1995. 4th ed. Harlow: Pearson Education, 2021.
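The PEAS description and the agent types above can be written down as a small record, which makes it easier to compare agents in a project on equal terms. The following is a minimal sketch, not an implementation from Russell and Norvig: the class names `PEAS`, `AgentType`, and `AgentDescription` and the camera-trap example are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class AgentType(Enum):
    """Agent types as listed in the note above."""
    REFLEX = "reflex"
    MODEL_BASED = "model-based"
    GOAL_BASED = "goal-based"
    UTILITY_BASED = "utility-based"
    LEARNING = "learning"


@dataclass(frozen=True)
class PEAS:
    """Task-environment description: Performance, Environment, Actuators, Sensors."""
    performance: tuple[str, ...]
    environment: tuple[str, ...]
    actuators: tuple[str, ...]
    sensors: tuple[str, ...]


@dataclass(frozen=True)
class AgentDescription:
    name: str
    agent_type: AgentType
    peas: PEAS

    def summary(self) -> str:
        """One-line description that is independent of the underlying technique."""
        sensors = ", ".join(self.peas.sensors)
        actuators = ", ".join(self.peas.actuators)
        return (
            f"{self.name} ({self.agent_type.value}): "
            f"senses via {sensors}; acts via {actuators}"
        )


# Hypothetical example: a software agent that labels camera-trap images.
camera_trap = AgentDescription(
    name="camera-trap classifier",
    agent_type=AgentType.LEARNING,
    peas=PEAS(
        performance=("species identification accuracy",),
        environment=("forest plot",),
        actuators=("label output",),
        sensors=("infrared camera",),
    ),
)

print(camera_trap.summary())
```

The same record could describe a robot or a human-machine collective by changing only the field values, which is the sense in which the scheme is technique-agnostic.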

Narrow/General

Classify systems by breadth of tasks and skill level (Emerging → Competent → Expert → Virtuoso → Superhuman), possibly with a separate autonomy axis. This scheme benchmarks systems against human performance, which is the comparison most readers expect.

Levels of Analysis

Use Marr’s three levels to talk about any information-processing system (biological, artificial, collective):

- Computational (goal/problem): What is solved (e.g., prediction under uncertainty)

- Algorithmic/representational: How information is encoded and transformed

- Implementational: Where it’s realized (silicon, neurons, organizations)

Bechtel, William, and Oron Shagrir. “The Non-Redundant Contributions of Marr’s Three Levels of Analysis for Explaining Information-Processing Mechanisms.” Topics in Cognitive Science 7, no. 2 (2015): 312–22. https://doi.org/10/f7bfmb.

Information-theoretical

Organize “intelligence” around information compression, uncertainty, and description length.
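As a rough illustration of how uncertainty and description length differ, the sketch below compares two sequences over the same two symbols. It assumes empirical Shannon entropy as the measure of uncertainty and uses zlib-compressed size as a crude, upper-bound proxy for description length. Both choices are assumptions made for this example, and the function names are hypothetical.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy_bits(symbols: str) -> float:
    """Empirical Shannon entropy in bits per symbol, from symbol frequencies only."""
    if not symbols:
        return 0.0
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def compressed_length_bytes(text: str) -> int:
    """Size after zlib compression: a crude proxy for description length."""
    return len(zlib.compress(text.encode("utf-8"), 9))


# A perfectly regular sequence and a pseudo-random one over the same alphabet.
regular = "ab" * 500
rng = random.Random(0)  # fixed seed so the example is repeatable
irregular = "".join(rng.choice("ab") for _ in range(1000))

for label, seq in (("regular", regular), ("irregular", irregular)):
    print(label, shannon_entropy_bits(seq), compressed_length_bytes(seq))
```

Both sequences have close to one bit of entropy per symbol when only symbol frequencies are counted, yet the regular one compresses far better. Description length captures structure that per-symbol uncertainty misses.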

Natural Computation

The info‑computational view treats nature as networks of concurrent information processes. It situates brains, machines, and organizations as different realisations of information dynamics.

Dodig-Crnkovic, Gordana, and Raffaela Giovagnoli, eds. Representation and Reality in Humans, Other Living Organisms and Intelligent Machines. Cham: Springer, 2017.

Nonhumans and AI

Cf. sentience and smart city.

On 'AI for the universe' (how does this compare with the calls for universal consideration, Earth jurisprudence, etc.?):

These papers argue for the moral consideration of nonhumans in AI ethics. The argument is progressive but can be extended further to guard against sentientist and paternalistic biases:

Owe, Andrea, and Seth D. Baum. “Moral Consideration of Nonhumans in the Ethics of Artificial Intelligence.” AI and Ethics 1, no. 4 (2021): 517–28. https://doi.org/10/gkfhxx.

Baum, Seth D., and Andrea Owe. “Artificial Intelligence Needs Environmental Ethics.” Ethics, Policy & Environment, 2022, 1–5. https://doi.org/10/gp8hrr.

Baum, Seth D., and Andrea Owe. “From AI for People to AI for the World and the Universe.” AI & Society, 2022. https://doi.org/10/gp8hr7.

For an overview:

Dubber, Markus Dirk, Frank Pasquale, and Sunit Das, eds. The Oxford Handbook of Ethics of AI. New York: Oxford University Press, 2020.

Galaz, Victor, Miguel A. Centeno, Peter W. Callahan, Amar Causevic, Thayer Patterson, Irina Brass, Seth Baum, et al. “Artificial Intelligence, Systemic Risks, and Sustainability.” Technology in Society 67 (2021): 101741. https://doi.org/10/gmv5d7.

Halsband, Aurélie. “Sustainable AI and Intergenerational Justice.” Sustainability 14, no. 7 (2022): 3922. https://doi.org/10/gp8hqh.

"A smart initiative is smarter if it connects across all S-E-T subsystems". Good as an example of the current baseline. Does not consider nonhuman agency or issues of justice for nonhuman beings.

Branny, Artur, Maja Steen Møller, Silviya Korpilo, Timon McPhearson, Natalie Gulsrud, Anton Stahl Olafsson, Christopher M Raymond, and Erik Andersson. “Smarter Greener Cities Through a Social-Ecological-Technological Systems Approach.” Current Opinion in Environmental Sustainability 55 (2022): 101168. https://doi.org/10/gp8fgt.

Chapman, Melissa, Caleb Scoville, Marcus Lapeyrolerie, and Carl Boettiger. “Power and Accountability in Reinforcement Learning Applications to Environmental Policy.” arXiv, 2022.

Three dimensions of governance: (1) seeing and knowing, (2) participation and engagement, and (3) interventions and actions. Critical perspective on the shifts due to digital technologies.

Kloppenburg, Sanneke, Aarti Gupta, Sake R. L. Kruk, Stavros Makris, Robert Bergsvik, Paulan Korenhof, Helena Solman, and Hilde M. Toonen. “Scrutinizing Environmental Governance in a Digital Age: New Ways of Seeing, Participating, and Intervening.” One Earth 5, no. 3 (2022): 232–41. https://doi.org/10/gp8hq6.

Bossert, Leonie, and Thilo Hagendorff. “Animals and AI: The Role of Animals in AI Research and Application – an Overview and Ethical Evaluation.” Technology in Society 67 (2021): 101678. https://doi.org/10/gp8qft.

On the anthropocentrism of AI ethics, among other things:

Hagendorff, Thilo. “Blind Spots in AI Ethics.” AI and Ethics, 2021. https://doi.org/10/gp8hr3.

On the speciesist biases in AI:

Hagendorff, Thilo, Leonie Bossert, Tse Yip Fai, and Peter Singer. “Speciesist Bias in AI: How AI Applications Perpetuate Discrimination and Unfair Outcomes Against Animals.” arXiv, 2022.

On the bias in human language about animals:

Takeshita, Masashi, Rafal Rzepka, and Kenji Araki. “Speciesist Language and Nonhuman Animal Bias in English Masked Language Models.” arXiv, 2022.

AI to recognise animal subjective experience in welfare (an example for critical/ethical analysis):

Neethirajan, Suresh. “The Use of Artificial Intelligence in Assessing Affective States in Livestock.” Frontiers in Veterinary Science 8 (2021): 715261. https://doi.org/10/gng6w9.

AI in Health

Morley, Jessica, Caio C. V. Machado, Christopher Burr, Josh Cowls, Indra Joshi, Mariarosaria Taddeo, and Luciano Floridi. “The Ethics of AI in Health Care: A Mapping Review.” Social Science & Medicine 260 (2020): 113172. https://doi.org/10/gj6kmc.

Ethics of Care

Martin, Kirsten. Ethics of Data and Analytics. Boca Raton: CRC Press, 2022.

Law and Regulation

Chesterman, Simon. We, the Robots? Regulating Artificial Intelligence and the Limits of the Law. Cambridge: Cambridge University Press, 2021.

In Conservation

Terms:

- culturomics, iEcology 3

- smart forests, smarter ecosystems for smarter cities

For the background on the need to transform knowledge systems, see:

Fazey, Ioan, Niko Schäpke, Guido Caniglia, Anthony Hodgson, Ian Kendrick, Christopher Lyon, Glenn Page, et al. “Transforming Knowledge Systems for Life on Earth: Visions of Future Systems and How to Get There.” Energy Research & Social Science 70 (2020): 101724. https://doi.org/10/gh8nwf.

Tuia, Devis, Benjamin Kellenberger, Sara Beery, Blair R. Costelloe, Silvia Zuffi, Benjamin Risse, Alexander Mathis, et al. “Perspectives in Machine Learning for Wildlife Conservation.” Nature Communications 13, no. 1 (2022): 792. https://doi.org/10/gpfb46.

Jarić, Ivan, Ricardo A. Correia, Barry W. Brook, Jessie C. Buettel, Franck Courchamp, Enrico Di Minin, Josh A. Firth, et al. “iEcology: Harnessing Large Online Resources to Generate Ecological Insights.” Trends in Ecology & Evolution 35, no. 7 (2020): 630–39. https://doi.org/10/ggr4b3.

Scoville, Caleb, Melissa Chapman, Razvan Amironesei, and Carl Boettiger. “Algorithmic Conservation in a Changing Climate.” Current Opinion in Environmental Sustainability 51 (2021): 30–35. https://doi.org/10/gh743j.

This article is a brief historical review of approaches with some key tensions and concepts listed.

Pichler, Maximilian, and Florian Hartig. “Machine Learning and Deep Learning: A Review for Ecologists.” arXiv, 2022.

Key Challenges

Baseline (for training). Machine learning and deep learning models have to rely on the available baseline. In most cases, the baseline is already severely degraded. In other cases, it is modified by what researchers describe as sustainable human use, as is the case with traditional cultures. However, the challenge in these cases is that these cultures have adopted different practices, are themselves recent, had dramatic impacts in the past, etc. Cf. the note on Indigenous knowledge. Therefore, it is challenging to use these patterns as baselines.

Subjectivity. The AI discourse presumes that training occurs in reference to traces of objective conditions, but all integrations in living communities are filtered through the subjectivities of living beings: their senses, perceptions, cognitions, etc. How can machine learning and deep learning account for this? See the 'seeing through the eyes of a bird' approach and projects. Cf. the note on subjectivity.

Humans and their current cultures impact living communities and skew the interpretation. This affects dataset collection, dataset interpretation, etc.

Future changes and new pressures such as climate change, deforestation, urbanisation, etc. put pressure on the capacities of AI models.

Can we call AI a source of "conservative innovations"? The idea here is that all machine learning discoveries are oriented towards the past because they depend on the interpretation of existing patterns.

The terminology of "naïve models", or models that do not have initial constraints, presumes some opposite models. What are they? Wise models? Practical proposals suggest constraining ecological models by physical laws, for example, to make them more grounded. Cf. the mention of subjectivity above: is that another way to make them less naïve? How does this sit with our argument that the naivety of such models helps to prevent bias?
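To make the contrast between an unconstrained and a constrained model concrete, here is a minimal sketch. It uses non-negativity of abundance as a stand-in for a physical constraint. This is an assumption for illustration, not one of the proposals referred to above, and the counts are hypothetical numbers, not data.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Hypothetical yearly counts of a declining population (illustrative only).
years = [0, 1, 2, 3, 4]
counts = [100.0, 60.0, 36.0, 22.0, 13.0]

# "Naive" model: a straight line with no knowledge that counts cannot be negative.
a, b = fit_line(years, counts)


def naive_predict(year):
    return a + b * year


# Constrained model: fit log(count) instead, so every prediction is positive.
log_a, log_b = fit_line(years, [math.log(c) for c in counts])


def constrained_predict(year):
    return math.exp(log_a + log_b * year)


horizon = 8  # a year outside the observed range
print("naive:", naive_predict(horizon))              # falls below zero
print("constrained:", constrained_predict(horizon))  # stays positive
```

The constraint makes the extrapolation plausible, but it also encodes a prior commitment about what the system can do, which is where the question about naivety and bias applies.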

Link this to the general direction that moves beyond conservation and sustainability towards more-than-human governance. "Machines for Justice" could be the slogan, or some such. This potentially shifts the criteria for success from optimisation within existing, conservative systems towards equity.

Directions

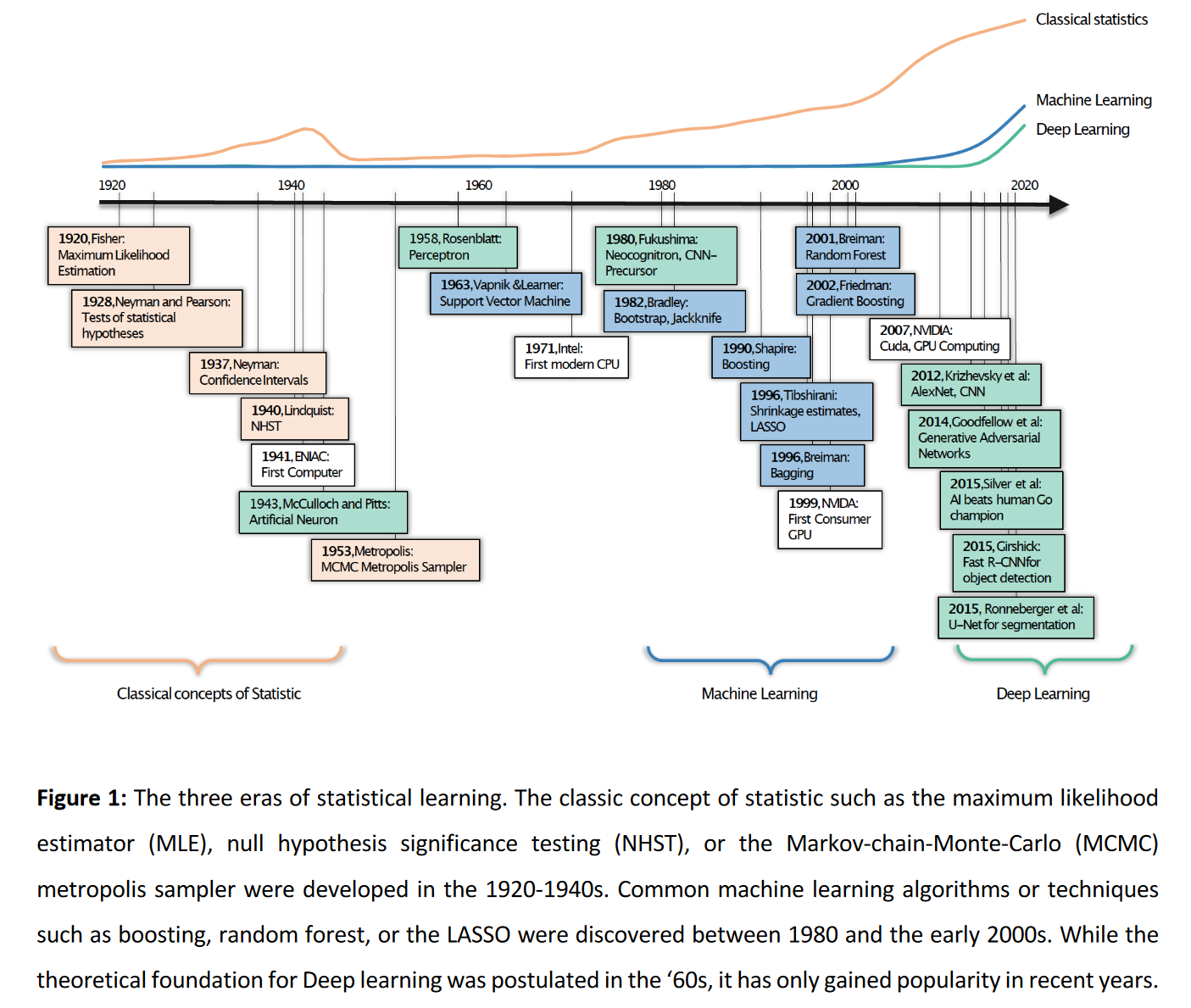

- Situate machine learning in the context of statistical models

- Consider the training datasets and ready-to-use pipelines such as those in gene sequencing or image recognition. The suggestion is to create large-scale, shared datasets, which are already common in natural language processing

- On the same line: smart datasets with only partial retraining (can this be relevant to the perspective sensitivity to accommodate subjective biases?)

- Transparency and bias of decisions based on machine learning and deep learning models

- Responsible AI 1,2 and its relevance to more-than-human collectives. The intersection between AI and social science disciplines (sociology, psychology, law).

- Fair and sustainable ML and DL models:

  - understanding their decisions

  - identifying potential biases

  - avoiding misuses by acknowledging limitations (e.g. the generalization ability for new datasets)

- Explainable AI and its relevance to more-than-human collectives
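One simple way to acknowledge the limitation on generalization to new datasets, listed above, is to record the range of each feature seen in training and flag any input that falls outside it. The sketch below assumes numeric features. The class name `RangeGuard` and the feature values are hypothetical, and a range check is only a coarse first test, not a full measure of dataset shift.

```python
from dataclasses import dataclass


@dataclass
class RangeGuard:
    """Remembers the span of each training feature and flags inputs outside it."""
    lower: list[float]
    upper: list[float]

    @classmethod
    def from_training_data(cls, rows):
        """Build the guard from an iterable of equal-length numeric rows."""
        columns = list(zip(*rows))
        return cls(
            lower=[min(col) for col in columns],
            upper=[max(col) for col in columns],
        )

    def out_of_range(self, row):
        """Return the indices of features that fall outside the training span."""
        return [
            i for i, value in enumerate(row)
            if not (self.lower[i] <= value <= self.upper[i])
        ]


# Hypothetical training rows: (mean temperature in °C, canopy cover fraction).
training_rows = [(12.0, 0.8), (14.5, 0.6), (13.2, 0.7)]
guard = RangeGuard.from_training_data(training_rows)

print(guard.out_of_range((13.0, 0.65)))  # within the conditions seen in training
print(guard.out_of_range((19.0, 0.2)))   # warmer and more open than any training row
```

A prediction for the second input would be an extrapolation, so it should be reported with that caveat or withheld.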

Cases

“Google engineer put on leave after saying AI chatbot has become sentient.” The Guardian.

AI in Architecture

This is some literature on the use of AI in the discipline of architecture. Recent and not much cited, in great contrast to the literature on AI elsewhere.

Armstrong, Helen, and Keetra Dean Dixon, eds. Big Data, Big Design: Why Designers Should Care About AI. Hudson: Princeton Architectural Press, 2021.

As, Imdat, and Prithwish Basu, eds. The Routledge Companion to Artificial Intelligence in Architecture. London: Routledge, 2021.

Bernstein, Phil. Machine Learning: Architecture in the Age of Artificial Intelligence. London: RIBA Publishing, 2022.

Chaillou, Stanislas. Artificial Intelligence and Architecture: From Research to Practice. Boston: Birkhäuser, 2022.

Darko, Amos, Albert P. C. Chan, Michael A. Adabre, David J. Edwards, M. Reza Hosseini, and Ernest E. Ameyaw. “Artificial Intelligence in the AEC Industry: Scientometric Analysis and Visualization of Research Activities.” Automation in Construction 112 (2020): 103081. https://doi.org/10/gg3xwx.

Leach, Neil. Architecture in the Age of Artificial Intelligence: An Introduction for Architects. London: Bloomsbury Visual Arts, 2021.

Steenson, Molly Wright. Architectural Intelligence: How Designers, Tinkerers, and Architects Created the Digital Landscape. Cambridge, MA: MIT Press, 2017.

AI Risks

Risks from Artificial Intelligence

CSI Transactions on ICT

References

Smith, Brian Cantwell. The Promise of Artificial Intelligence: Reckoning and Judgment. Cambridge, MA: MIT Press, 2019.

Footnotes

Jarić, Ivan, Céline Bellard, Ricardo A. Correia, Franck Courchamp, Karel Douda, Franz Essl, Jonathan M. Jeschke, et al. “Invasion Culturomics and iEcology.” Conservation Biology 35, no. 2 (2021): 447–51. https://doi.org/10/gqbd75.

Barredo Arrieta, Alejandro, Natalia Díaz-Rodríguez, Javier Del Ser, Adrien Bennetot, Siham Tabik, Alberto Barbado, Salvador Garcia, et al. “Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges Toward Responsible AI.” Information Fusion 58 (2020): 82–115. https://doi.org/10/ggqs5w.

Wearn, Oliver R., Robin Freeman, and David M. P. Jacoby. “Responsible AI for Conservation.” Nature Machine Intelligence 1, no. 2 (2019): 72–73. https://doi.org/10/ghf44t.
